3D human surface reconstruction uses computer vision and graphics methods to estimate human pose and shape, and to reconstruct surface and clothing details, from data captured by vision sensors. It comprises two key tasks: human pose and shape estimation, and clothed human surface reconstruction. The former typically estimates a parametric human model from images or videos, while the latter seeks to recover real-world surface geometry and texture as accurately as possible, creating a digital twin of the real person. 3D human surface reconstruction has significant applications in mixed reality, virtual meetings, and film production.

3D human surface reconstruction has long been a challenging research topic in computer vision and graphics, primarily because human posture, body shape, surface geometry, and texture are complex and highly variable. Traditional methods often use volumetric representations or predefined triangular meshes as human models, and reconstruct the body by applying multi-view stereo algorithms to images captured by multi-view systems. Our research focuses on developing efficient and practical 3D human body representations and on using them to recover human surface shapes from images or video sequences captured by RGB and depth cameras. We explore 3D human representations in the spatial, temporal, and frequency domains, striving for a balance between reconstruction accuracy and algorithmic efficiency. We also emphasize robust reconstruction and lightweight algorithms, with a strong commitment to translating research findings into practical applications.

Selected Papers

|

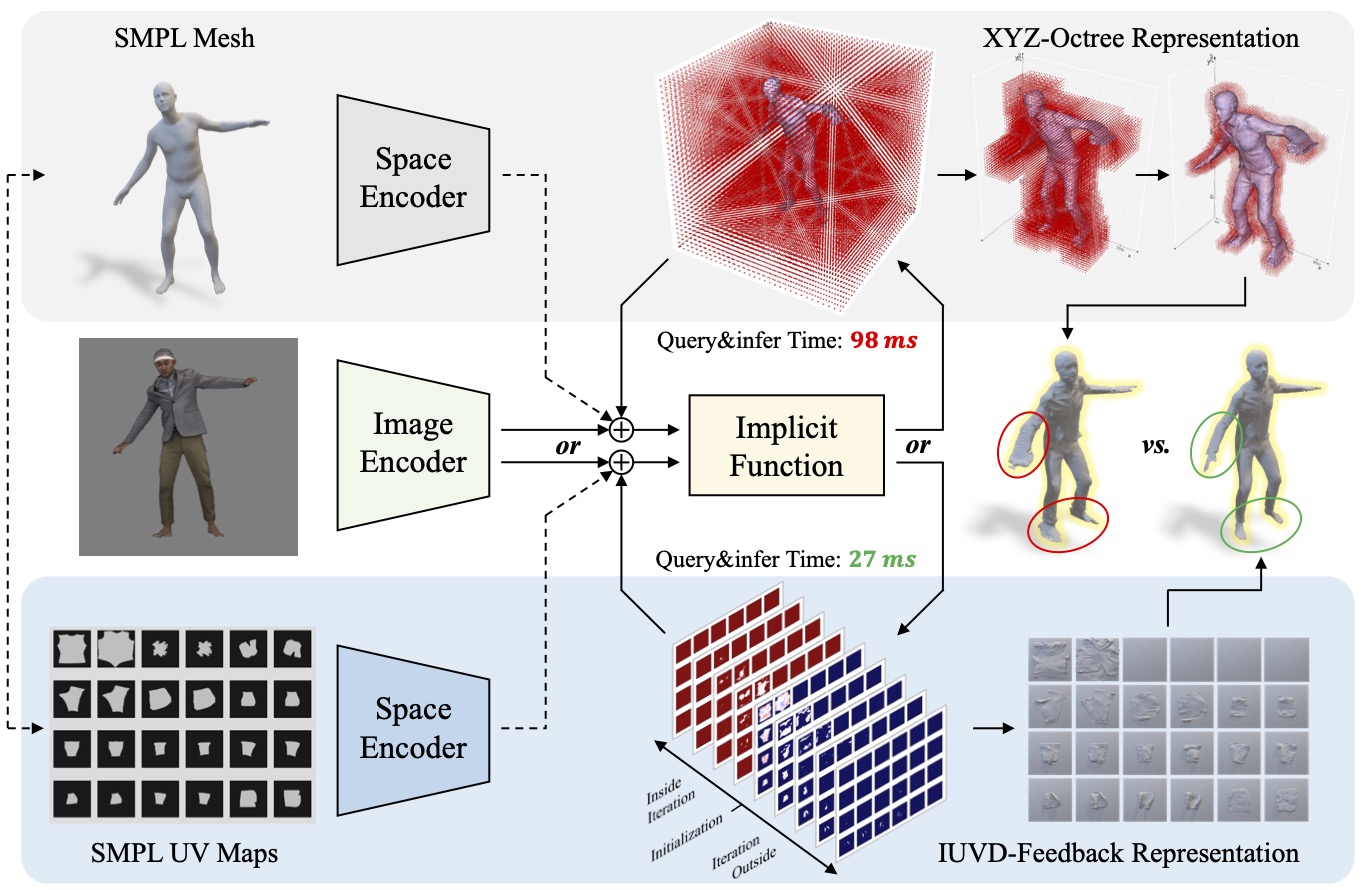

An Embeddable Implicit IUVD Representation for Part-based 3D Human Surface Reconstruction |

|

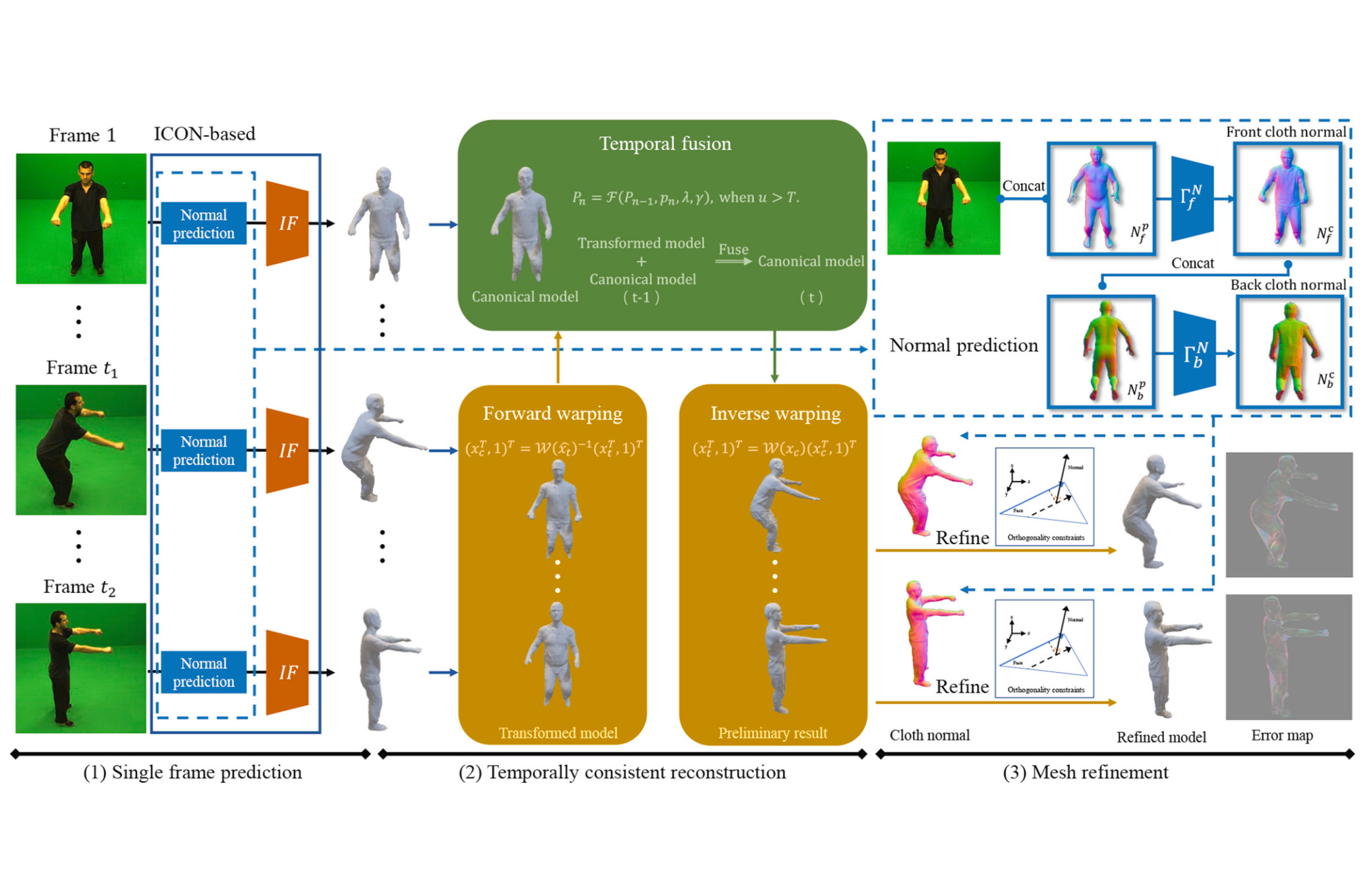

Temporally Consistent Reconstruction of 3D Clothed Human Surface with Warp Field |