An Embeddable Implicit IUVD Representation for Part-based 3D Human Surface Reconstruction

IEEE TIP 2024

Abstract

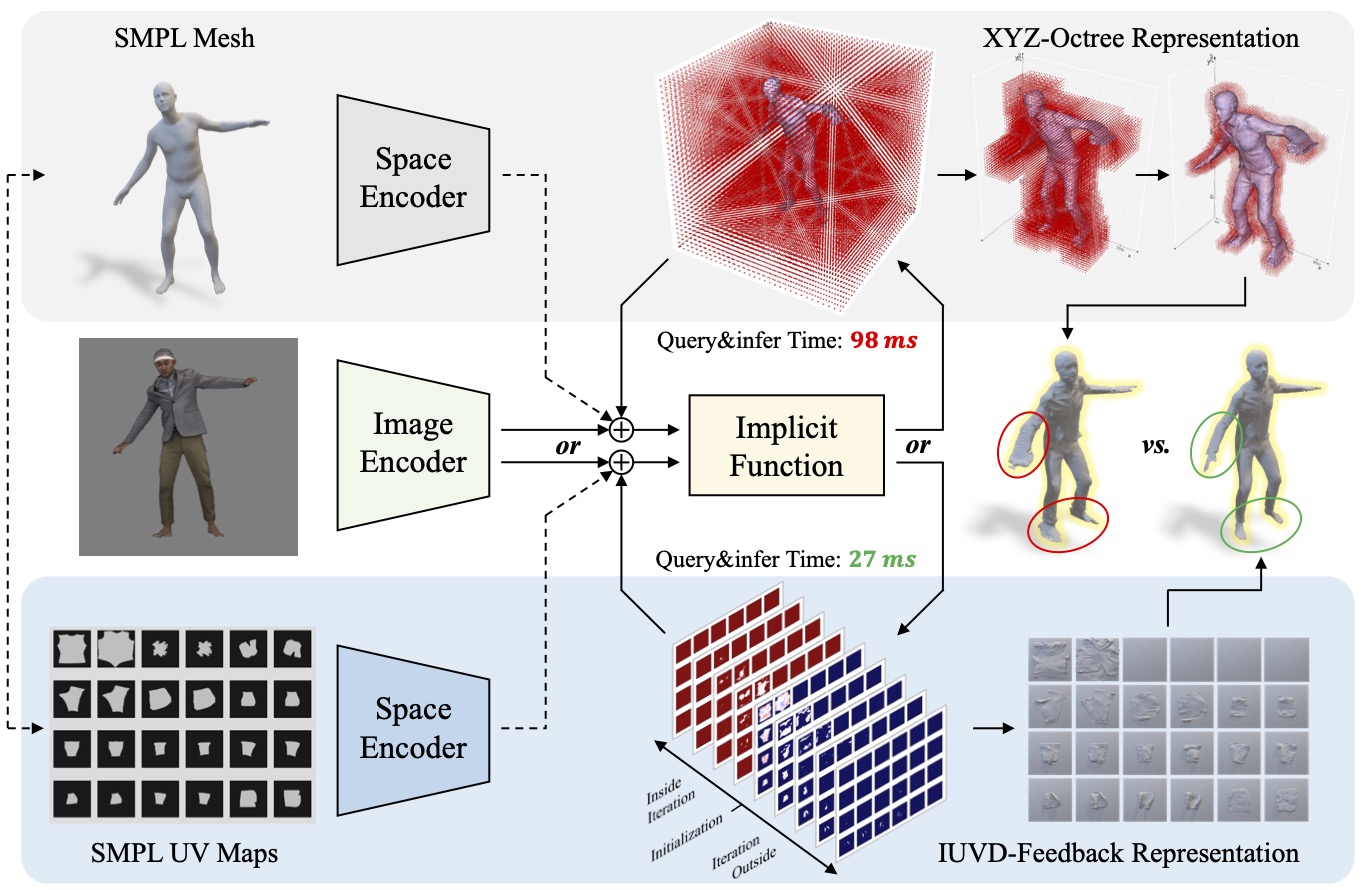

To reconstruct a 3D human surface from a single image, it is crucial to simultaneously consider human pose, shape, and clothing details. Recent approaches have combined parametric body models (such as SMPL), which capture body pose and shape priors, with neural implicit functions that flexibly learn clothing details. However, this combined representation introduces additional computation, e.g., signed distance calculation in 3D body feature extraction, leading to redundancy in the implicit query-and-infer process and failing to preserve the underlying body shape prior. To address these issues, we propose a novel IUVD-Feedback representation, consisting of an IUVD occupancy function and a feedback query algorithm. This representation replaces the time-consuming signed distance calculation with a simple linear transformation in the IUVD space, leveraging the SMPL UV maps. Additionally, it reduces redundant query points through a feedback mechanism, leading to more reasonable 3D body features and more effective query points, thereby preserving the parametric body prior. Moreover, the IUVD-Feedback representation can be embedded into any existing implicit human reconstruction pipeline without requiring modifications to the trained neural networks. Experiments on the THuman2.0 dataset demonstrate that the proposed IUVD-Feedback representation improves the robustness of results and makes the query-and-infer process three times faster. Furthermore, this representation holds potential for generative applications by leveraging the semantic information inherited from the parametric body model.

Overview

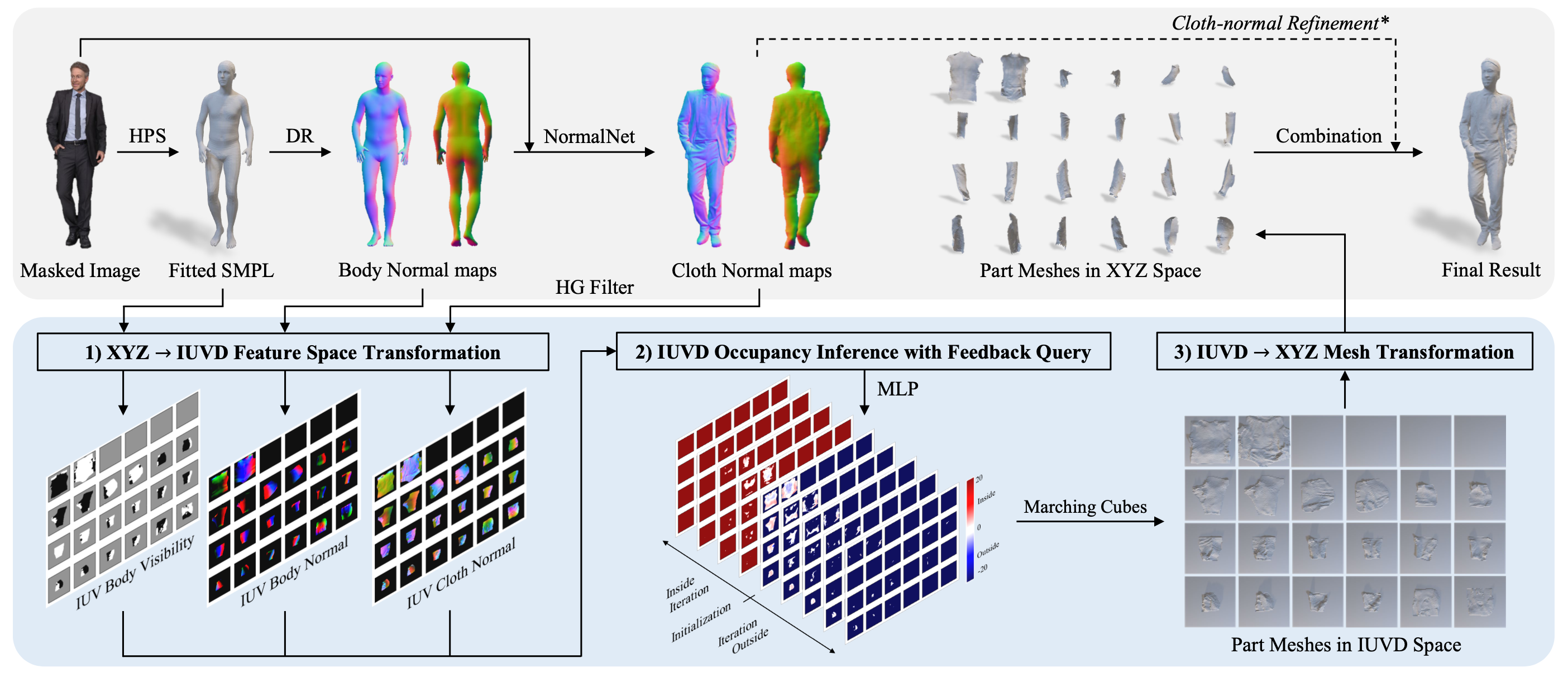

Given a masked color image, we first obtain the SMPL mesh, body normal maps, and cloth normal maps. The implicit 3D human surface reconstruction process is then restructured and accelerated in IUVD space in three steps: 1) XYZ to IUVD feature space transformation, 2) IUVD occupancy inference with feedback query, 3) IUVD to XYZ mesh transformation. Finally, the part-based meshes are combined and optionally refined in XYZ space to produce the final result.

Highlights

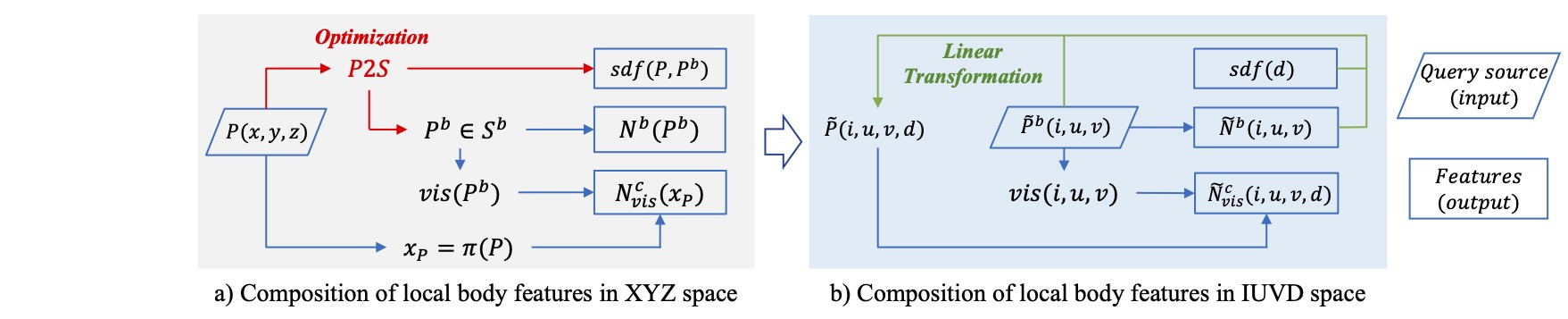

1) XYZ-IUVD Feature Space Transformation

By changing the source of query points, the local body features in XYZ space are transformed to IUVD space. The time-consuming P2S optimization is replaced by a simple linear transformation that constructs the IUVD local body features, as shown in the figure.

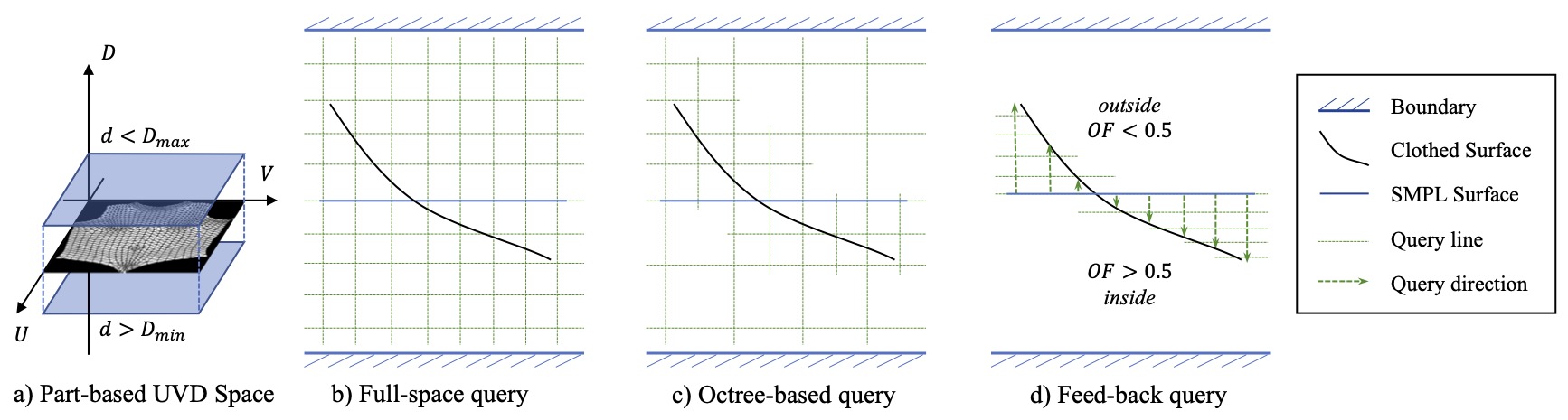
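The linear transformation in step 1 can be illustrated with a minimal sketch. It assumes an IUVD point is addressed by a body part index I, a UV location on that part's SMPL UV map, and an offset D along the body surface normal; this reading, the `d_scale` parameter, and the function names are illustrative assumptions, not the paper's code. Under that assumption, both the XYZ position of a query point and its distance-to-body feature are linear in D, so no nearest-point search on the mesh is needed.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def iuvd_to_xyz(surface_point: Sequence[float],
                unit_normal: Sequence[float],
                d: float,
                d_scale: float = 1.0) -> Vec3:
    """Map one IUVD query point to XYZ (hypothetical helper).

    surface_point / unit_normal: body position and unit normal stored at the
    (I, U, V) texel. d: normalized offset along the normal. d_scale: assumed
    conversion from normalized D to metric units.
    """
    offset = d_scale * d
    x, y, z = (p + offset * n for p, n in zip(surface_point, unit_normal))
    return (x, y, z)


def signed_distance_feature(d: float, d_scale: float = 1.0) -> float:
    """Distance-to-body feature read directly from D.

    This treats the offset along the normal as the signed distance, which is
    a reasonable approximation only close to the body surface.
    """
    return d_scale * d


# Toy example: one texel on a flat patch whose normal points along +z.
body_point = (1.0, 2.0, 3.0)
body_normal = (0.0, 0.0, 1.0)
query_xyz = iuvd_to_xyz(body_point, body_normal, d=0.5, d_scale=0.1)
query_sdf = signed_distance_feature(0.5, d_scale=0.1)
```

In XYZ space the same feature would require finding the closest point on the body mesh for every query point, which is the cost the IUVD formulation avoids.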

2) IUVD Occupancy Inference with Feedback Query

To localize the 3D human surface from the implicit IUVD occupancy function, we present a novel feedback query method that is faster than both full-space query and octree-based query.

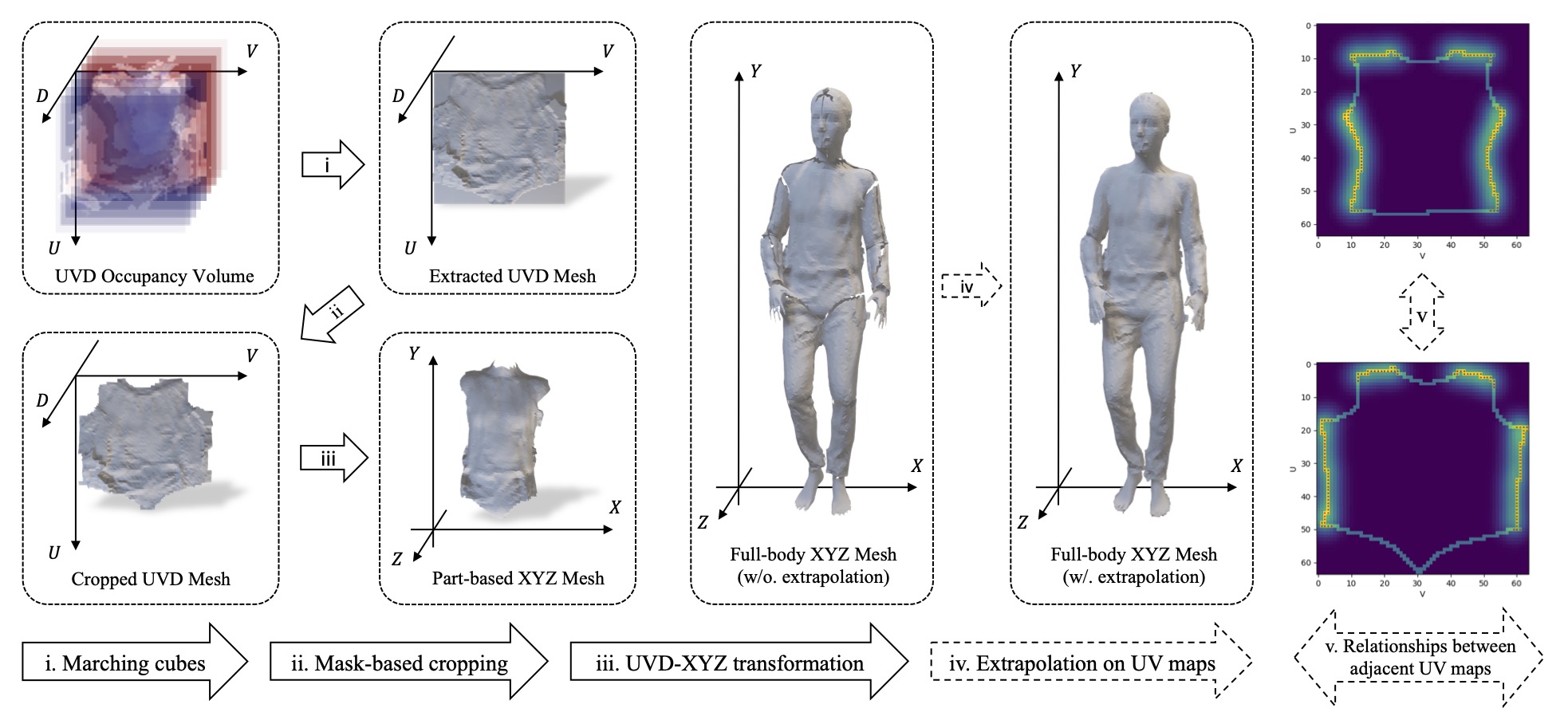
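The exact feedback rule is not spelled out on this page, so the following is only one illustrative way to let earlier occupancy answers steer later queries. It assumes occupancy is queried along the D axis of a single (I, U, V) column, starting at the level closest to the body surface (D = 0) and stepping outward or inward depending on the first answer. The function name, the `occ` callback, and the toy cloth thickness are hypothetical.

```python
from typing import Callable, List, Optional, Tuple


def feedback_query_column(occ: Callable[[float], bool],
                          d_levels: List[float]) -> Tuple[Optional[int], int]:
    """Locate the surface along D for one (I, U, V) column.

    occ(d) returns True when the point at offset d is inside the surface.
    Returns (index of the first outside level, number of occupancy queries).
    The index is None if no inside/outside flip is found within d_levels.
    """
    # Start at the level closest to the body surface (D = 0).
    k = min(range(len(d_levels)), key=lambda i: abs(d_levels[i]))
    start_inside = occ(d_levels[k])
    n_queries = 1
    # Feedback: the first answer decides the search direction.
    step = 1 if start_inside else -1
    while 0 <= k + step < len(d_levels):
        k += step
        n_queries += 1
        if occ(d_levels[k]) != start_inside:
            # Occupancy flipped, so the surface lies between two levels.
            return (k if start_inside else k + 1), n_queries
    return None, n_queries


# Toy column: 65 levels in [-1, 1], clothed surface 0.1 outside the body.
d_levels = [i / 32 - 1 for i in range(65)]
surface_index, n_queries = feedback_query_column(lambda d: d < 0.1, d_levels)
full_space_queries = len(d_levels)
```

In this toy column the search stops after a handful of queries, whereas a full-space query would evaluate all 65 levels. The saving in this sketch depends on the clothed surface staying close to the body prior.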

3) IUVD-XYZ Mesh Transformation

We design a real-time approach that combines offline dilation and online erosion steps to obtain a visually watertight result. The IUVD part meshes are extracted in IUVD space and then transformed to XYZ space.
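For readers unfamiliar with the two morphological operations named above, here is a generic sketch on a binary mask. What exactly is dilated and eroded is not specified on this page; the sketch assumes a per-part UV validity mask and a 4-neighbour structuring element, and the helper names are hypothetical. Dilation grows a part region by one texel so that adjacent parts can overlap at their seams, and erosion trims it back.

```python
from typing import List

Mask = List[List[bool]]


def _is_set(mask: Mask, r: int, c: int) -> bool:
    # Texels outside the map count as unset.
    return 0 <= r < len(mask) and 0 <= c < len(mask[0]) and mask[r][c]


def _neighbourhood(r: int, c: int):
    # The texel itself plus its 4 direct neighbours.
    return [(r, c), (r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]


def dilate(mask: Mask) -> Mask:
    """A texel is set if it or any 4-neighbour is set."""
    return [[any(_is_set(mask, rr, cc) for rr, cc in _neighbourhood(r, c))
             for c in range(len(mask[0]))] for r in range(len(mask))]


def erode(mask: Mask) -> Mask:
    """A texel stays set only if it and all 4-neighbours are set."""
    return [[all(_is_set(mask, rr, cc) for rr, cc in _neighbourhood(r, c))
             for c in range(len(mask[0]))] for r in range(len(mask))]


# Toy part mask: a 3x3 block in the middle of a 7x7 UV map.
part_mask = [[2 <= r <= 4 and 2 <= c <= 4 for c in range(7)] for r in range(7)]
dilated = dilate(part_mask)   # could be precomputed once (offline)
restored = erode(dilated)     # cheap trim at run time (online)
```

Because the dilated masks depend only on the body model's UV layout in this sketch, they could be computed once ahead of time, which is one plausible reason to split the work into an offline and an online step.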

Experiments

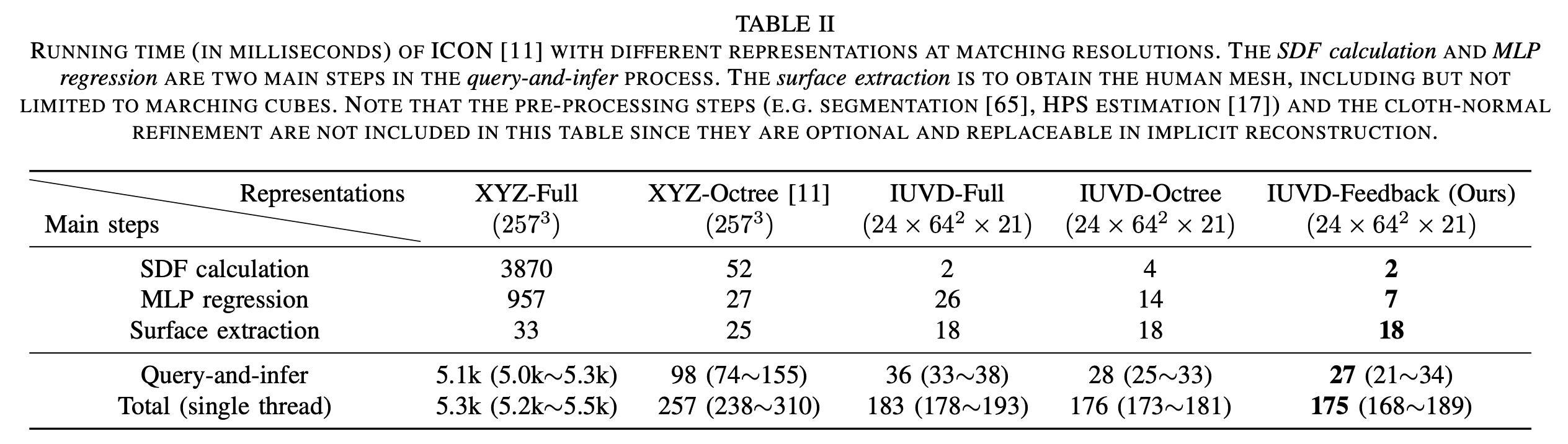

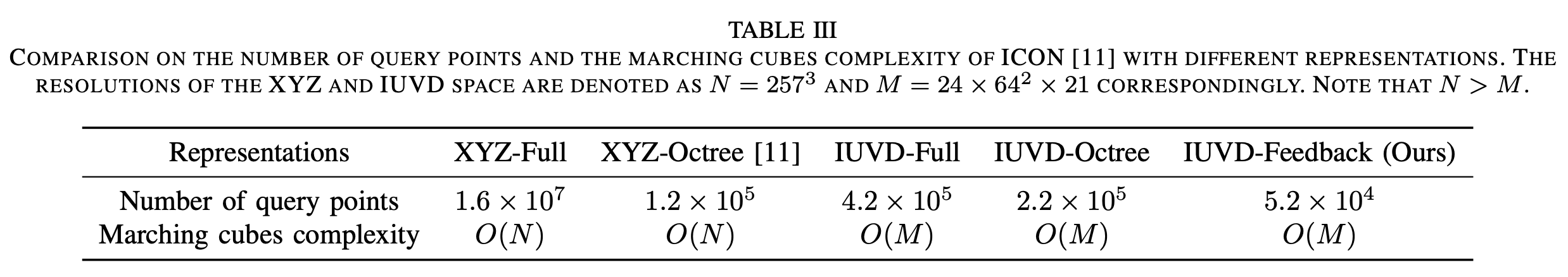

Reconstruction Speed Evaluation

Experiments show that the proposed IUVD-Feedback representation accelerates the query-and-infer process by a factor of three over the baseline method.

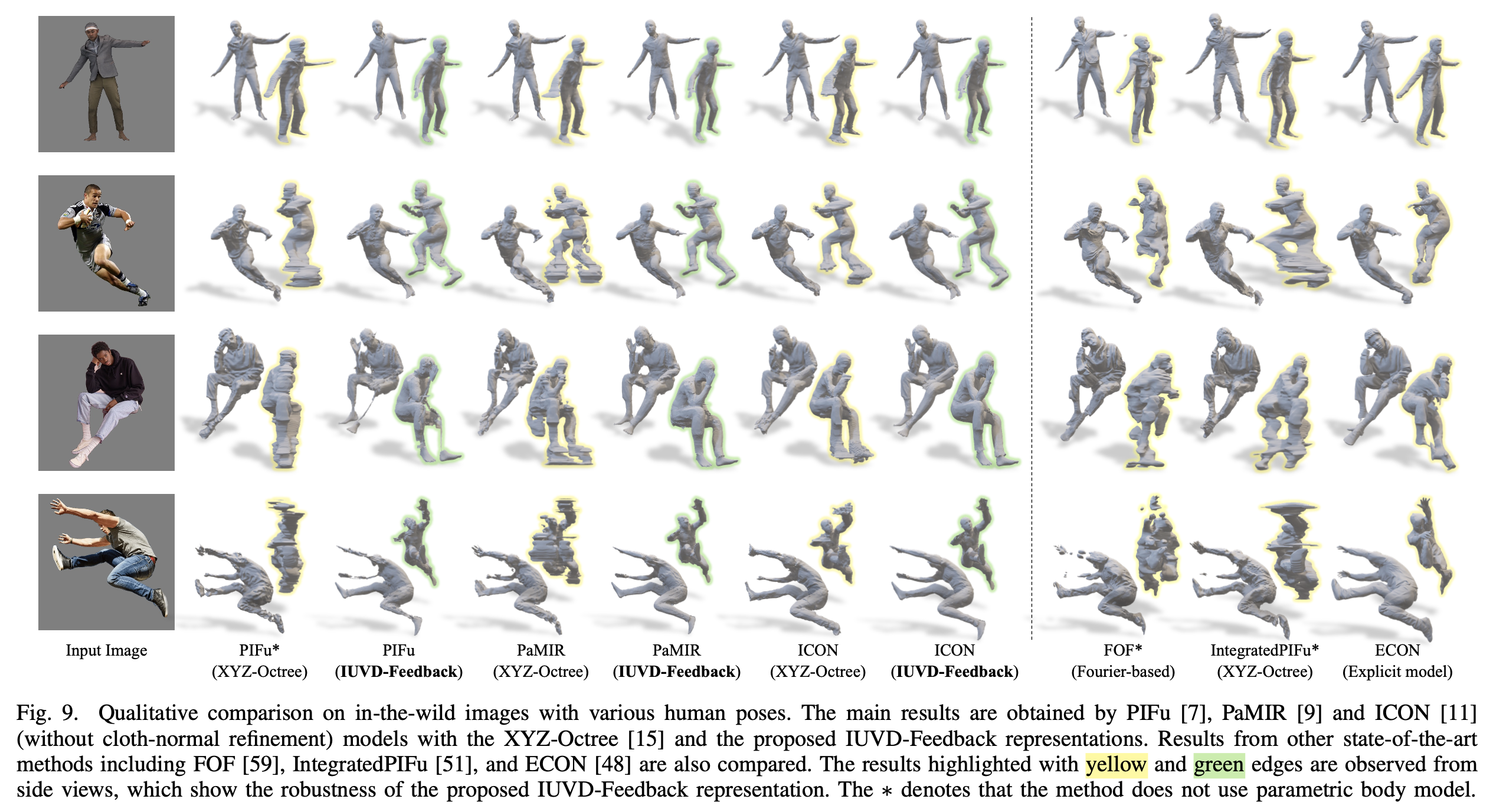

Reconstruction Accuracy Evaluation

As shown in the qualitative comparison, the proposed IUVD-Feedback representation improves the robustness of results without re-training the neural networks.

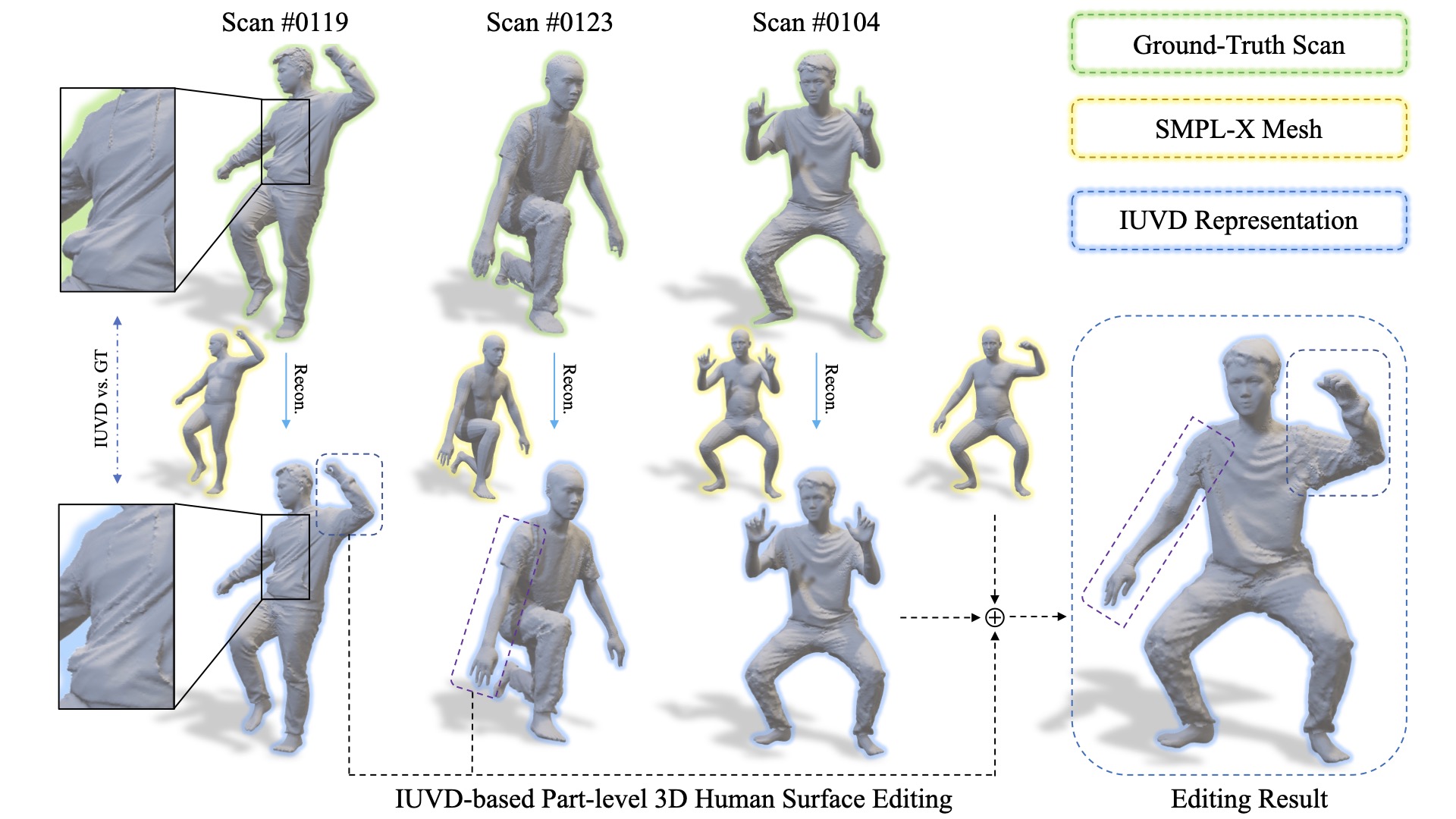

Part-based Editing Application

The proposed IUVD-Feedback representation reconstructs high-fidelity 3D scans and shows potential for part-based surface editing applications.

Citation

@article{li2024embeddable,
  title = {An Embeddable Implicit IUVD Representation for Part-Based 3D Human Surface Reconstruction},
  author = {Li, Baoxing and Deng, Yong and Yang, Yehui and Zhao, Xu},
  journal = {IEEE Transactions on Image Processing},
  year = {2024},
  volume = {33},
  pages = {4334-4347},
}