View Consistency Aware Holistic Triangulation for 3D Human Pose Estimation

CVIU 2023

Abstract

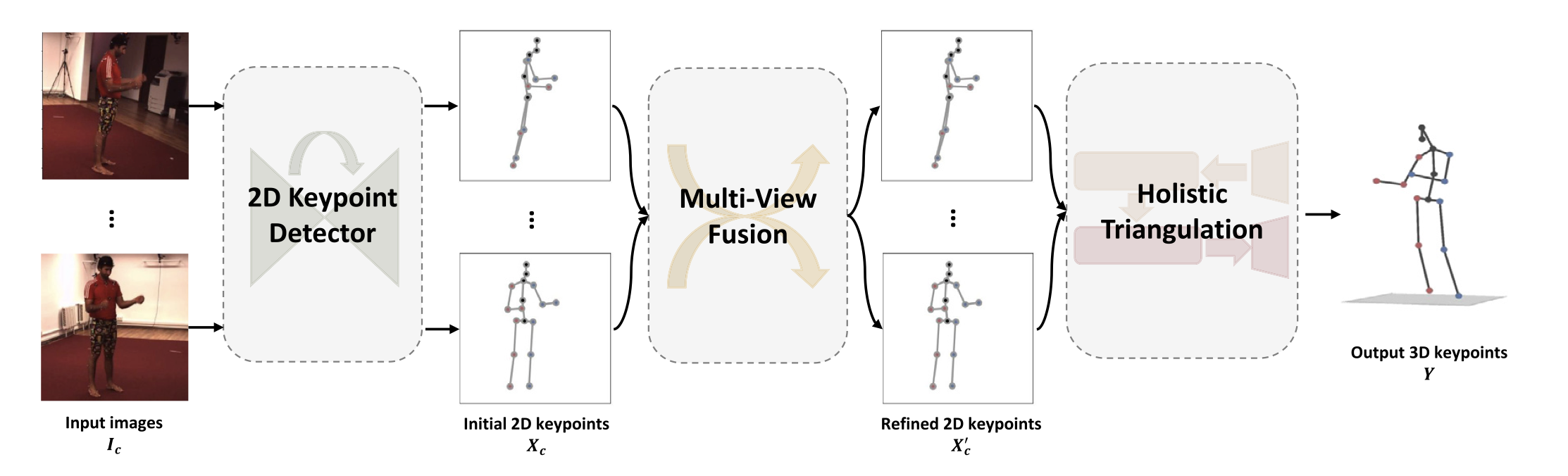

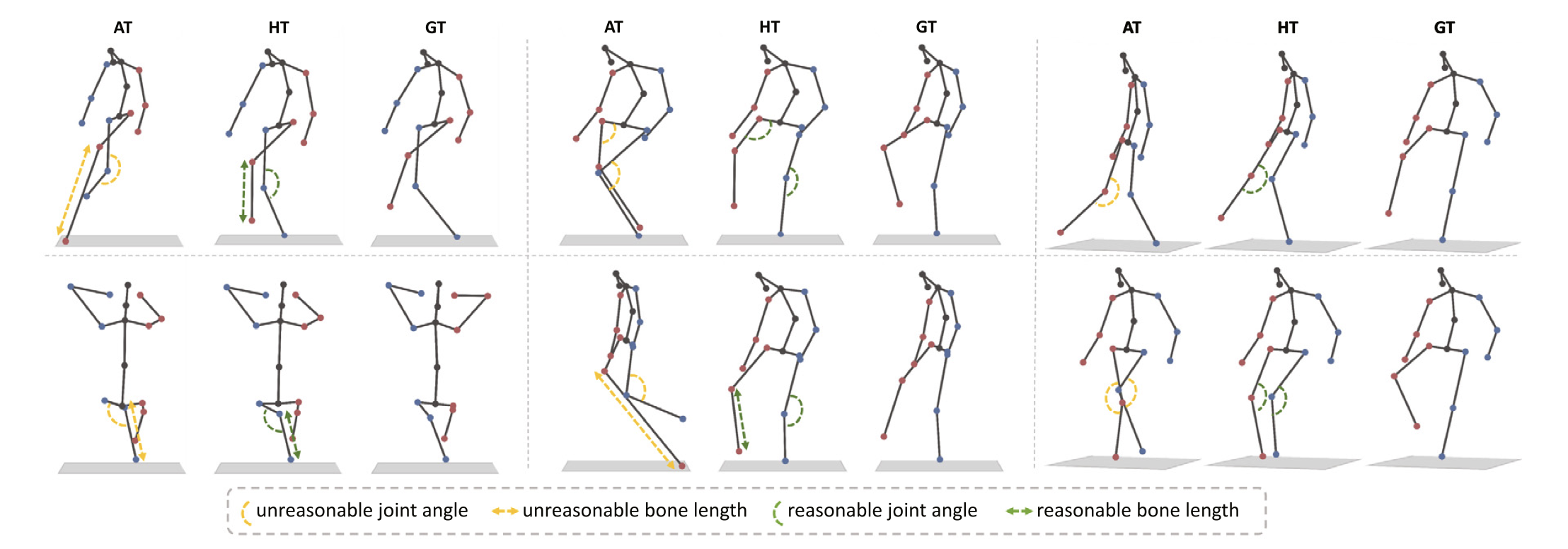

The rapid development of multi-view 3D human pose estimation (HPE) is attributed to the maturation of monocular 2D HPE and the geometry of 3D reconstruction. However, two challenges remain: 2D detection outliers in occluded views, caused by neglecting view consistency, and implausible 3D poses, caused by a lack of pose coherence. To address them, we introduce a Multi-View Fusion module that refines 2D results by establishing correlations between views. Holistic Triangulation is then proposed to infer the whole pose as a single entity, and an anatomy prior is injected to maintain pose coherence and improve plausibility. The anatomy prior is extracted by applying PCA to skeletal structure features, which can factor out global context and joint-by-joint relationships, from abstract to concrete. Benefiting from the closed-form solution, the whole framework is trained end-to-end. Our method outperforms the state of the art in both precision and plausibility, the latter assessed by a new metric.
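To make the idea of a PCA anatomy prior more concrete, here is a minimal NumPy sketch under our own assumptions, not the paper's implementation. It assumes the skeletal structure features are bone vectors (child joint minus parent joint) on a hypothetical 5-joint skeleton `PARENTS`; the helper names `bone_vectors`, `fit_pca_prior`, and `reconstruction_error` and the number of components `k` are illustrative choices. A pose whose features lie far from the fitted PCA subspace gets a large reconstruction error, which is one simple way to score implausibility.

```python
import numpy as np

# Hypothetical 5-joint chain: PARENTS[j] is the parent of joint j (-1 marks the root).
PARENTS = [-1, 0, 1, 0, 3]


def bone_vectors(poses, parents):
    """Map (N, J, 3) joint positions to (N, 3 * (J - 1)) bone-vector features."""
    bones = [poses[:, j] - poses[:, p] for j, p in enumerate(parents) if p >= 0]
    return np.stack(bones, axis=1).reshape(len(poses), -1)


def fit_pca_prior(features, k):
    """Return the feature mean and the top-k principal directions as columns."""
    mu = features.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(features - mu, full_matrices=False)
    return mu, vt[:k].T


def reconstruction_error(feature, mu, U):
    """Squared distance between a feature vector and its PCA reconstruction."""
    centered = feature - mu
    residual = centered - U @ (U.T @ centered)
    return float(residual @ residual)
```

Bone vectors are invariant to a global translation of the pose, so adding a different offset to every training pose leaves the fitted subspace unchanged.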

Highlights

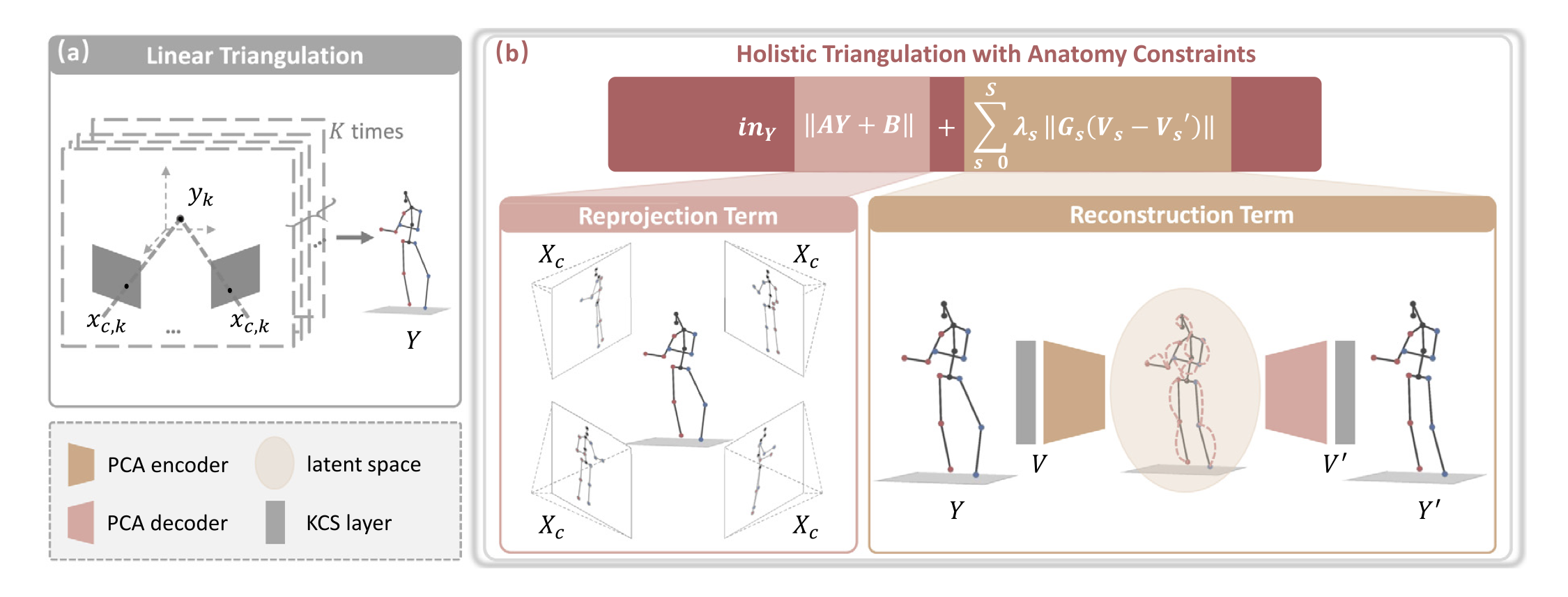

Holistic Triangulation with Anatomy Constraints

We introduce Holistic Triangulation, a method that reasons about the entire pose collectively, in contrast to Linear Triangulation, which reconstructs each 3D joint individually. To incorporate anatomy constraints, a PCA reconstruction term is included. In addition, the data is transformed from the keypoint space to the skeletal structure space, which strengthens the relationships between joints and introduces explicit features.
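The contrast between per-joint Linear Triangulation and a holistic solve can be sketched as a single regularized least-squares problem. This is a minimal sketch under our own assumptions, not the paper's formulation: the data term uses standard inhomogeneous DLT rows with optional per-view confidences, the skeletal structure features are assumed to be bone vectors, and the PCA reconstruction term penalizes the part of the bone vectors that falls outside a given PCA subspace (`mu`, `U`, for example fitted on training poses), weighted by a hypothetical `lam`. The helper names `bone_matrix`, `dlt_rows`, and `holistic_triangulate` are illustrative. Because both terms are quadratic in the stacked joint coordinates, the minimizer comes from one linear solve.

```python
import numpy as np


def bone_matrix(parents):
    """Linear map D such that D @ x gives stacked bone vectors for x = joints.ravel()."""
    J = len(parents)
    blocks = []
    for j, p in enumerate(parents):
        if p < 0:
            continue
        r = np.zeros((3, 3 * J))
        r[:, 3 * j:3 * j + 3] = np.eye(3)
        r[:, 3 * p:3 * p + 3] = -np.eye(3)
        blocks.append(r)
    return np.vstack(blocks)


def dlt_rows(P, uv):
    """Two inhomogeneous DLT equations A @ X = b for one 2D observation under camera P (3x4)."""
    u, v = uv
    A = np.stack([u * P[2, :3] - P[0, :3], v * P[2, :3] - P[1, :3]])
    b = np.array([P[0, 3] - u * P[2, 3], P[1, 3] - v * P[2, 3]])
    return A, b


def holistic_triangulate(Ps, uvs, parents, mu, U, lam=1.0, weights=None):
    """Closed-form solve for all joints at once.

    Minimizes  sum_{c,j} w_cj ||A_cj x_j - b_cj||^2 + lam * ||(I - U U^T)(D x - mu)||^2
    Ps: C camera matrices (3x4); uvs: (C, J, 2) keypoints; weights: (C, J) confidences.
    mu, U: mean and principal directions (columns) of the bone-vector prior.
    """
    C, J, _ = uvs.shape
    w = np.ones((C, J)) if weights is None else weights
    H = np.zeros((3 * J, 3 * J))
    g = np.zeros(3 * J)
    # Data term: block diagonal, one 3x3 block per joint (plain linear triangulation).
    for c in range(C):
        for j in range(J):
            A, b = dlt_rows(Ps[c], uvs[c, j])
            s = slice(3 * j, 3 * j + 3)
            H[s, s] += w[c, j] * A.T @ A
            g[s] += w[c, j] * A.T @ b
    # Prior term: penalizes the component of the bone vectors outside the PCA subspace.
    D = bone_matrix(parents)
    M = np.eye(D.shape[0]) - U @ U.T
    MD = M @ D
    H += lam * MD.T @ MD
    g += lam * MD.T @ (M @ mu)
    return np.linalg.solve(H, g).reshape(J, 3)
```

With `lam=0` the normal matrix `H` is block diagonal, so the solve decouples into independent per-joint linear triangulation; a positive `lam` couples the joints through the bone matrix `D`. A linear solve is also differentiable with respect to its inputs, which is what allows a closed-form triangulation to sit inside an end-to-end trained network.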

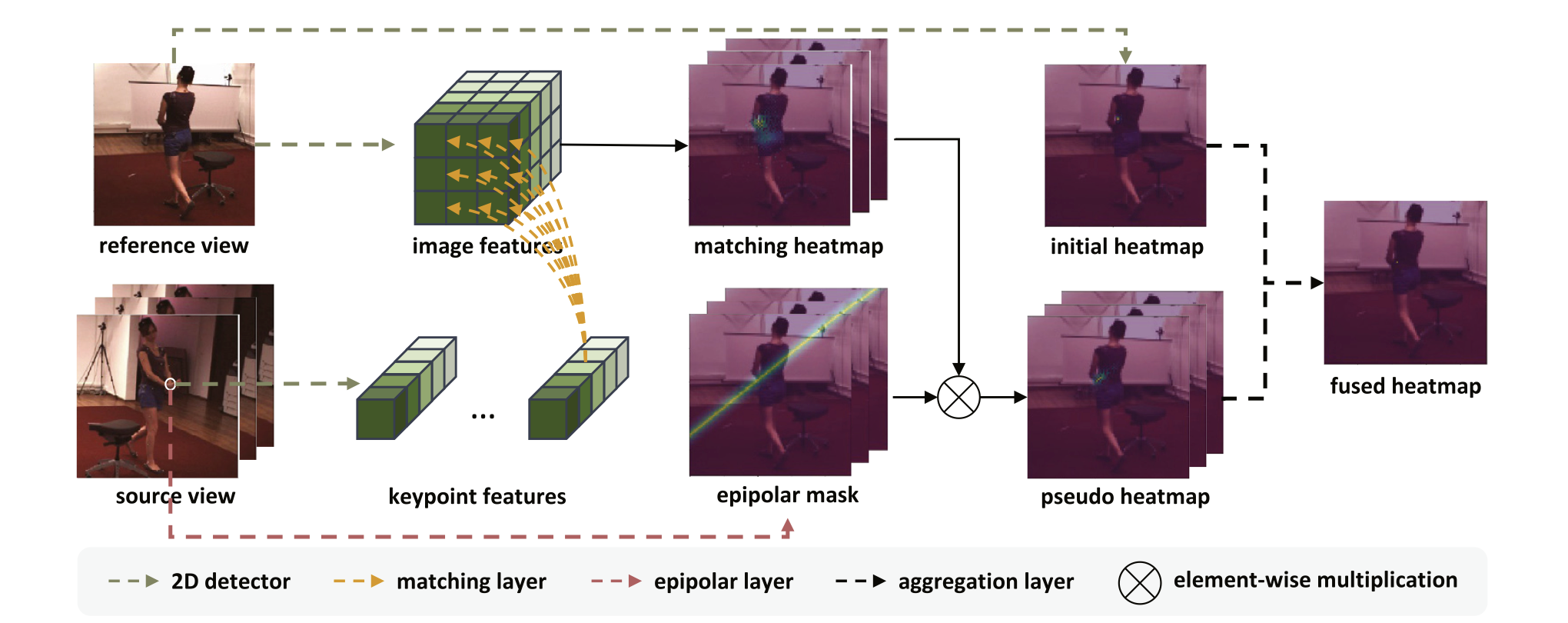

Multi-view Fusion 2D Keypoint Refinement

To improve cross-view consistency, we use pseudo heatmaps generated from the other views to assist each view. These pseudo heatmaps represent the probability of a source-view keypoint being located at each position in the reference view.
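The paper's Multi-View Fusion module is learned; as a purely geometric stand-in, the sketch below illustrates why pseudo heatmaps from other views help. It assumes known fundamental matrices `F` with the convention `x_ref^T F x_src = 0`, takes only the argmax of each source heatmap, spreads it as a Gaussian band of hypothetical width `sigma` along the corresponding epipolar line in the reference view, and blends the mean pseudo heatmap into the reference heatmap with a hypothetical weight `alpha`. All function names are illustrative. A single source view only constrains the keypoint to a line, but bands from views with different baselines intersect at the true location, which can outweigh an occlusion outlier in the reference view.

```python
import numpy as np


def gaussian_heatmap(center_xy, height, width, sigma=2.0, amplitude=1.0):
    """Isotropic Gaussian blob centred at pixel (x, y)."""
    ys, xs = np.mgrid[0:height, 0:width]
    d2 = (xs - center_xy[0]) ** 2 + (ys - center_xy[1]) ** 2
    return amplitude * np.exp(-d2 / (2 * sigma**2))


def pseudo_heatmap(src_heatmap, F, sigma=2.0):
    """Spread the source-view peak along its epipolar line l = F @ [x, y, 1] in the reference view."""
    height, width = src_heatmap.shape
    y, x = np.unravel_index(np.argmax(src_heatmap), src_heatmap.shape)
    conf = src_heatmap[y, x]
    a, b, c = F @ np.array([x, y, 1.0])
    ys, xs = np.mgrid[0:height, 0:width]
    # Point-to-line distance for every reference-view pixel.
    dist = np.abs(a * xs + b * ys + c) / np.hypot(a, b)
    return conf * np.exp(-dist**2 / (2 * sigma**2))


def fuse(ref_heatmap, src_heatmaps, Fs, alpha=0.5):
    """Blend the reference heatmap with the mean pseudo heatmap from the other views."""
    pseudo = np.mean([pseudo_heatmap(h, F) for h, F in zip(src_heatmaps, Fs)], axis=0)
    fused = (1 - alpha) * ref_heatmap + alpha * pseudo
    return fused / max(fused.max(), 1e-8)
```

For a quick check, a horizontal baseline with `F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]` maps a source point to the reference row with the same `y`, and a vertical baseline with `F = [[0, 0, 1], [0, 0, 0], [-1, 0, 0]]` maps it to the column with the same `x`; two such views pin down the keypoint where the two bands cross.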

Experiments

Citation

@article{wan2023view,
  title = {View consistency aware holistic triangulation for 3D human pose estimation},
  author = {Wan, Xiaoyue and Chen, Zhuo and Zhao, Xu},
  journal = {Computer Vision and Image Understanding},
  volume = {236},
  pages = {103830},
  year = {2023},
  publisher = {Elsevier}
}